State-of-the-art accelerators, including Xilinx Alveo FPGAs and NVIDIA Tesla V100 GPUs, closely coupled with server processors, form the backbone of this cluster. The software stack provides an ecosystem of machine learning frameworks, libraries, and runtimes targeting heterogeneous computing accelerators.

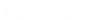

FPGA/GPU Cluster Software Stack

The FPGA/GPU cluster supports three widely used deep learning frameworks: TensorFlow, Caffe, and MXNet. These frameworks provide a high-level abstraction layer for specifying deep learning architectures and for training, tuning, testing, and validating models. The software stack also includes vendor-specific machine learning libraries whose compute functions are tuned for a particular hardware architecture to deliver the best possible performance per watt.

Applications

- Software IP and applications targeting ML on heterogeneous computing systems (e.g., CNNs for object detection and speech recognition)

- Software stack, including parallel programming models, compilers, middleware, runtimes, drivers, and operating systems

- Case studies: ML, big data analytics, data-intensive computing, and cybersecurity

- ASIC prototyping (e.g., in CMOS and other semiconductor technologies) for implementing custom neural network accelerators

Benefits

- Secure remote access

- Machine learning frameworks: TensorFlow, Caffe, and MXNet

- Support for deep learning training and inference

- Customizability: Select the right combination of accelerators for your application

- Reference designs that use the software stack for OpenCL- and MPI-based heterogeneous cluster computing

- Scalability: Build a neural network graph on a single node, then scale out across more nodes

- Fast, automated setup and configuration